A stunning total of 2.8 billion people use Facebook or one of its services (which include WhatsApp, Instagram, and Messenger), and 2.2 billion use at least one of these platforms every day. And people aren’t using these platforms just to chitchat: Facebook helps people find apartments and old friends, and on a larger scale, it has played a role in starting revolutions and in helping to elect America’s current president. But how does Facebook orchestrate who and what we see? The answer lies with algorithms – and by understanding these algorithms better, perhaps we can gain a new understanding of just how extensively social media distorts and curates our worldview.

◊

Since it debuted on a Harvard computer, Facebook has grown into an almost unfathomably powerful entity, one capable of influencing everything from our social lives to our political views.

A platform this powerful should be questioned and held accountable for its actions, right? Well, that’s easier said than done. Still, by exploring some of the ways that Facebook selects and filters out the content we see, perhaps we can be a bit more critical of what appears on it and other social media, and thereby stay more accurately informed about the issues that matter.

Source: Song_about_summer, via Adobe Stock

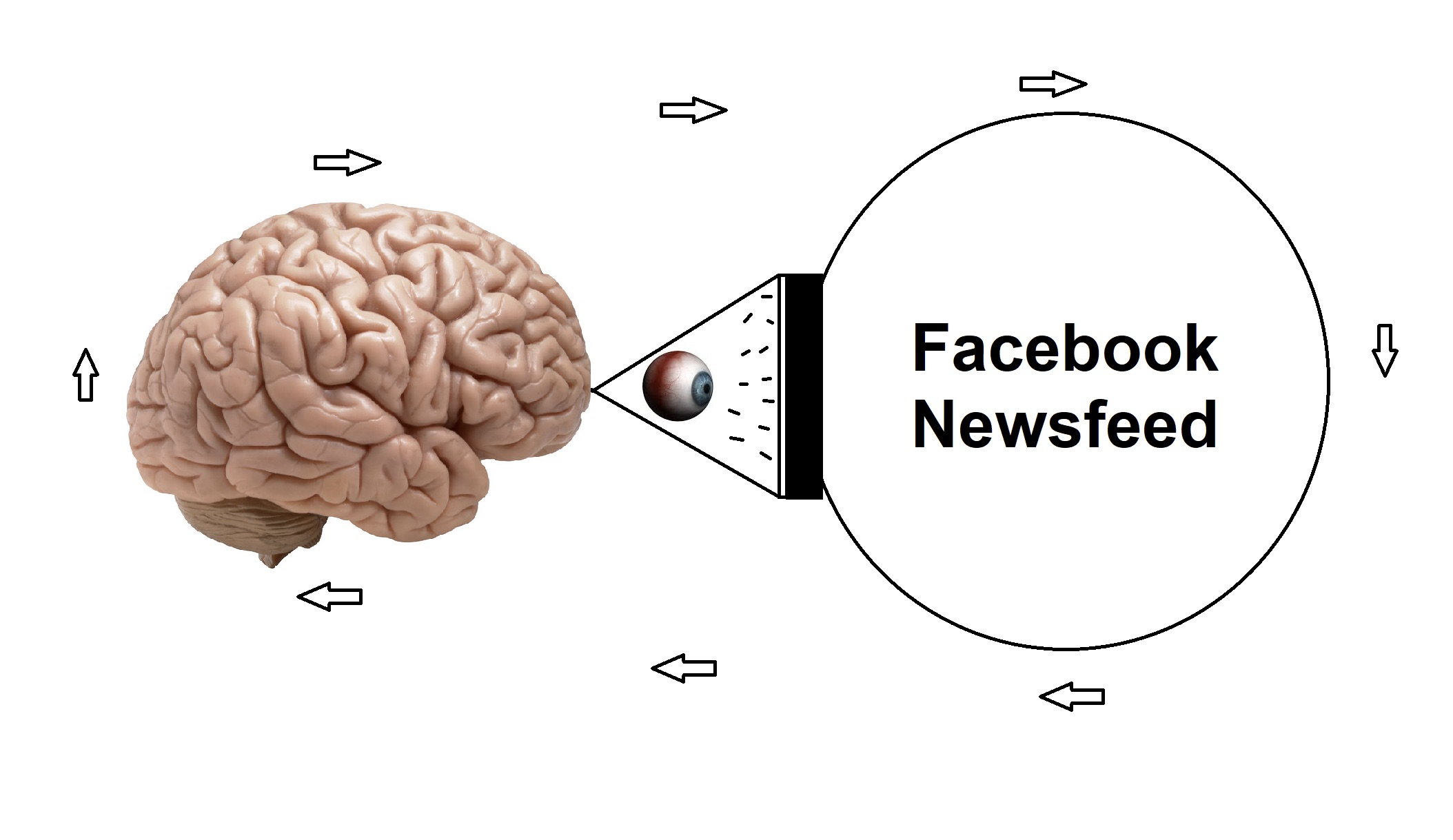

The content we see on Facebook relies entirely on its complex and enigmatic algorithms, so developing some understanding of what those algorithms are and how they use our information is a vital first step to ensuring that we run our social media accounts, not the other way around.

Origins of the Algorithms: Alan Turing and Beyond

Algorithms are, on the most basic level, sequences of steps for completing a particular task. The concept of an “algorithm” is very old: it probably originated in Mesopotamia with the Babylonians, around 1600 BC, and Mesopotamian civilizations also devised some of the earliest known counting systems. The word “algorithm” itself derives from the name of the 9th-century Persian mathematician Abu Abdullah Muhammad ibn Musa Al-Khwarizmi, who was a forefather of algebra.

Over the following centuries, algorithms continued to grow and evolve. In 1936, Alan Turing helped give the concept its modern, formal definition with the Turing machine – a model with the hypothetical ability to simulate any algorithm’s logic. His work would alter the course of mathematics and lay the theoretical groundwork for the first computers.

Today, algorithms are everywhere, controlling our traffic, our purchases, and even our dating lives. Despite their massive influence, we tend to forget about them, even though “these bite-sized chunks of maths are essential to our daily lives” and are “[the secrets] to the digital world,” according to Professor Marcus du Sautoy in the documentary The Secret Rules of Modern Living: Algorithms.

Modern algorithms that underlie social media are not static. They’re constantly evolving, and they vary from site to site. Facebook, for instance, has its own unique algorithms, different from those of Pinterest, LinkedIn, Google, and all the other feeds you browse through each day.

How Facebook’s Algorithms Work: A Brief, Non-Mathematical Overview

When you log onto Facebook, you’re definitely not simply seeing the most recent posts. You’re actually seeing a confection of posts selected by Facebook – or, more precisely, by several of Facebook’s algorithms.

Facebook’s algorithms have been changing since the site’s inception, but the most recent update (which we’ll tackle here) appeared in 2018. This algorithm is very complex, and its inner workings are known to few people other than Mark Zuckerberg and a handful of others at Facebook, but we do know some things about it. First, it has been designed to prioritize posts that Facebook believes will matter to users. In practice, this means your News Feed will feature posts that Facebook’s algorithm predicts will generate conversation.


Mark Zuckerberg (Source: Wikimedia Commons)

On a more detailed level, according to brandwatch.com, Facebook’s newest algorithm relies on four components when picking the posts that appear at the top of your feed:

- Inventory. This term refers to all the content on Facebook that could appear in your feed, which the site’s algorithm records and filters through.

- Signals. Facebook uses a list of criteria called signals to help choose what content to show you. This list includes comments, likes, shares, the time the post was published, the type of content it is, and more.

- Predictions. Facebook’s algorithm tracks your activity and uses your previous actions and data collected from signals to select content it thinks you’ll engage with more, thus weeding out content that probably won’t interest you.

- Score. Facebook assigns every piece of content a different score depending on its “relevancy” to the user, which it judges by combining the information from signals using an algorithm. The same content receives a different score for different people, meaning that nobody sees exactly the same thing on their feed.
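Facebook has not published its ranking code, so the following is only a toy sketch in Python of how the four components above could fit together: an inventory of posts, signals attached to each post, a prediction based on one user’s past behavior, and a score used to rank the feed. The `Post` fields, the signal weights, the recency discount, and the per-user `affinity` values are all hypothetical assumptions chosen for illustration, not Facebook’s actual signals or formula.

```python
from dataclasses import dataclass

# Hypothetical weights (an assumption, not Facebook's): comments and shares
# count for more than likes, mimicking a preference for posts that spark conversation.
LIKE_WEIGHT, COMMENT_WEIGHT, SHARE_WEIGHT = 1.0, 3.0, 4.0
DEFAULT_AFFINITY = 0.1  # assumed value for authors a user has never engaged with


@dataclass
class Post:
    post_id: str
    author: str
    likes: int
    comments: int
    shares: int
    hours_old: float


def predict_engagement(post: Post, affinity: dict[str, float]) -> float:
    """Toy relevance score combining a post's signals with one user's history."""
    # Signals: interactions the post has already received.
    interaction = (LIKE_WEIGHT * post.likes
                   + COMMENT_WEIGHT * post.comments
                   + SHARE_WEIGHT * post.shares)
    # Signal: recency, so older posts are discounted.
    recency = 1.0 / (1.0 + post.hours_old)
    # Prediction: scale by how much this user has engaged with the author before.
    return affinity.get(post.author, DEFAULT_AFFINITY) * interaction * recency


def rank_feed(inventory: list[Post], affinity: dict[str, float], limit: int = 3) -> list[str]:
    """Score every post in the inventory and return the top post IDs for one user."""
    ranked = sorted(inventory, key=lambda p: predict_engagement(p, affinity), reverse=True)
    return [p.post_id for p in ranked[:limit]]


# Hypothetical inventory shared by two users with different histories.
inventory = [
    Post("a", "close_friend", likes=10, comments=5, shares=0, hours_old=2),
    Post("b", "brand_page", likes=200, comments=1, shares=5, hours_old=1),
    Post("c", "old_classmate", likes=30, comments=20, shares=2, hours_old=5),
]
alice = {"close_friend": 5.0, "old_classmate": 1.0}
bob = {"brand_page": 1.0}

print(rank_feed(inventory, alice))  # ['a', 'c', 'b']
print(rank_feed(inventory, bob))    # ['b', 'c', 'a']
```

The same inventory produces two different feeds, which illustrates why nobody sees exactly the same thing: in this sketch, the brand page’s post has the most interactions of all, yet it sinks to the bottom of Alice’s feed because she has never engaged with that page.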

On occasion, Facebook’s latest News Feed algorithm takes a more direct approach; for example, in May 2019, Facebook announced that it would be surveying users about their closest friends, then matching this data with information gathered from signals.

Across the board, Facebook’s new algorithm is supposed to combat fake news by favoring social interaction and close friends. This has made it harder for many brands, especially those without responsive social followings, to get their content seen. Sounds great, doesn’t it? Why wouldn’t you want to see more posts from your close friends and from accounts you spend a lot of time on, and fewer from advertisers?

Well, there are a few disturbing things about the new algorithm. One of the main problems is that Facebook is much more than a social hangout: for many people, it’s their main source of news. But since Facebook prioritizes posts that tend to generate engagement, the facts can get lost in the fray, pushed out by more controversial content masquerading as truth. This can create feedback loops that prioritize incendiary information, regardless of whether it’s factual or biased. The result can be echo chambers, in which these feedback loops create the illusion of a majority opinion that often has no basis in reality.

The Dark Side of Facebook: Political Corruption and Data Farming

In early 2018, the Facebook-Cambridge Analytica data scandal shattered the already fragile idea that our data was ever safe or private on the Internet. The scandal revealed that Cambridge Analytica, a United Kingdom-based political consulting firm, had harvested the personal data of millions of Facebook users without their consent – and used it for political purposes, including work for the Trump campaign.

We’ve known that Facebook has had access to our data since its inception. For example, Facebook uses cookies, which means that if you’re logged onto Facebook while browsing other sites, Facebook can track your activity on those sites.

Facebook has also invested in facial recognition technology, which it uses to suggest whom to tag in photos – and which essentially enables it to recognize our faces wherever they appear on the platform. Recently, a federal appeals court allowed a class-action suit in Illinois over Facebook’s use of facial recognition to proceed, with potential damages estimated at as much as $35 billion.

“This is how the internet business works now: If you need to access new populations, you have to deal with Facebook, and buy your way in.” —John Herrman, “We’re Stuck With the Tech Giants. But They’re Stuck With Each Other,” The New York Times

Unfortunately, though citizens occasionally win suits against Facebook when the site takes their data without consent, it’s probably safe to say that no information you put on Facebook is definitively private. Much of it winds up floating into the ether of the Internet – and it often settles in the hands of ad companies.

The question of whether or not Facebook actually sells this data to ad companies and other buyers has been a hugely contentious topic, particularly since the Cambridge Analytica scandal broke. According to Mark Zuckerberg, Facebook does not sell users’ data, despite raking in $40 billion per year from advertisers.

(Photo by Firmbee.com on Unsplash)

However, just how safe our data is on Facebook remains extremely controversial, as companies like Cambridge Analytica have been able to harvest users’ data through personality quizzes and other third-party apps. Although Facebook might not sell your data, don’t assume it’s a safe place to post confidential information.

Facebook Follies: Social Media’s Social Consequences

Many of Facebook’s critics assert that its algorithm promotes feedback loops and illusory, insubstantial connections.

“The short-term, dopamine-driven feedback loops that we have created are destroying how society works. No civil discourse. No cooperation. Misinformation. Mistruth. And it’s not an American problem. This is not about Russian ads. This is a global problem. So we are in a really bad state of affairs right now, in my opinion.” —Chamath Palihapitiya, former senior Facebook executive

The documentary “Facebook Follies: The Unexpected Consequences of Social Media” delves into the consequences of some of these feedback loops and Facebook-born connections, in all their chaos, distortion, and glory. In one particularly dire example of the consequences of using Facebook, a user loses her health insurance after posting photos of a family vacation online.

(Source: Avimanyu786 [detail], via Wikimedia Creative Commons)

Sometimes, the algorithm works in people’s favor, though. The documentary also features Facebook users who have found their fiancés (or hundreds of dates) and even started revolutions using the platform.

Essentially, social media and its algorithms are neutral forces, which tend to reflect human nature more than they distort it. In the end, however, Facebook’s algorithm absorbs the corrupt beliefs, stereotypes, and desires of the people and corporations who build and use it, unless those flaws are actively addressed.

Break the Algorithm: Be Skeptical, Do Your Research, Get Offline

Across the board, people seem to be growing more and more skeptical of the invisible, inscrutable algorithms of Facebook and other social media behemoths. In September 2019, eight states opened an antitrust investigation into Facebook, citing its serial acquisition of other social media platforms. Facebook itself has been the subject of countless lawsuits.

“Technologies do not, in themselves, change anything, but rather are socially constructed and deployed... The dyad of big data and algorithms can enable new cultural and social forms, or they can be made to reinforce the most egregious aspects of our present social order…What is truly new about this configuration is that we have a choice.” —Mirko Tobias Schäfer, The Datafied Society: Studying Culture Through Data

Knowing all this, you might have the subversive desire to delete your Facebook account and retreat to a cabin in the woods. While this is tempting, it’s not entirely realistic for most of us. For better or worse, Facebook is a part of our world.

There are ways to hack the Facebook system, though. For example, think twice about the so-called “facts” you see in your News Feed. Double- and triple-check the sources of news stories and memes. Do more background research on how social media works (for example, try watching the MagellanTV documentary Facebook Follies, which features information from credible experts). Make an effort to follow groups outside your political orientation and social sphere. Question everything that Facebook tells you.

“Ordinary people [can] reflect on the normative decisions made by algorithmic power and, based on this, consciously transform their ethical selves.” —João Carlos Magalhães, “Do Algorithms Shape Character? Considering Algorithmic Ethical Subjectification”

Importantly, realize that you are capable of hacking Facebook’s algorithm. Analyze what you see on Facebook, and watch out for implicit biases that might be reflected in the content that appears thanks to Facebook’s algorithm. Choose to engage with factual and unbiased information.

Finally, there’s always the possibility that it might be time to get offline . . . at least for a while.

Ω

Title image: Mark Zuckerberg in San Jose by Anthony Quintano via Wikimedia Commons.